Complete, accurate, actionable and current data: the dream of every IT manager. The corporate world increasingly recognizes the importance of data quality, but optimizing and, above all, preserving data quality require discipline and planning. Short data quality optimization stints are like bad diets: they yield short-term results but end in a yo-yo effect in the long term.

Type 2 Solutions has helped businesses optimize their data for many years. “We answer questions from businesses about data quality almost every day. That is a good thing, because it is badly needed, especially in today’s connected business environment where data problems can lead to severe issues, if not hefty fines,” says Jack de Hamer, partner at data management specialist Type 2 Solutions.

“The increasing digitization of the business environment and stricter requirements of web giants such as bol.com and Alibaba most certainly drive the increased awareness of data quality in the market. While master data often has to be provided only once, the quality and accuracy are frequently insufficient. More often than not there is no proper process in place to monitor and preserve data quality.”

Where to Start

De Hamer observes that businesses often do not know where to start when it comes to data quality optimization. “A one-time cleansing exercise for a specific purpose is not a solution. There are more benefits to a long-term solution.” And by long-term solution, de Hamer means data quality monitoring software. Those solutions are able to monitor the quality of large data volumes and should be able to notify the owner of the data when exceptions occur.

De Hamer recognizes that data quality optimization is not the core business of most companies. “A manufacturer makes products, and all its processes are geared towards doing that as efficiently as possible. Data quality optimization requires a whole different set of skills.”

Prevent Decay

Data quality optimization is a continuous process. “After a quick one-time cleanse you will be right back where you started in no time, or maybe in a worse place. Data is like a living organism: it is constantly moving and evolving.

“Therefore, constant monitoring and cleansing are necessary. Look at it like maintaining the woodwork on your house. Regular upkeep not only makes it look better, it also increases its life expectancy. But beyond that, it prevents the much worse consequential damage, such as decay and leaks, that overdue maintenance causes.”

Strategic Advantage

It is not easy to quantify the damage caused by ‘overdue’ maintenance of data. It largely depends on the potential consequential damage. “Costs can range from a few euros per customer for mailings that are returned as undeliverable, to thousands or even hundreds of thousands of euros from the consequences of an incorrect invoice, customs declaration or label.”

Return on investment should not, however, be the main focus for companies, argues de Hamer: “I think it would be better if businesses improved the quality of their data to gain a strategic advantage and as a means to maintain and extend their customer base.”

A real-world example: NedZink

NedZink is a good example of a company that has committed to optimizing the quality of their data.

A number of their larger customers had asked NedZink to exchange data through EDI. It did not take long for NedZink to realize that the quality of their master data just did not cut it for this kind of operation.

Their data was appropriate for internal use, where it was usable despite a few extra handling steps, but an external party would not accept that.

NedZink decided to go all the way and make data quality optimization a standard step in their process. The recurrent cleansing, in combination with the Data Quality Monitor, has dramatically increased the quality of the data.

The level of quality achieved and the speed at which the project was completed meant that NedZink was ready for electronic data exchange long before their own customers were.

Want to get started?

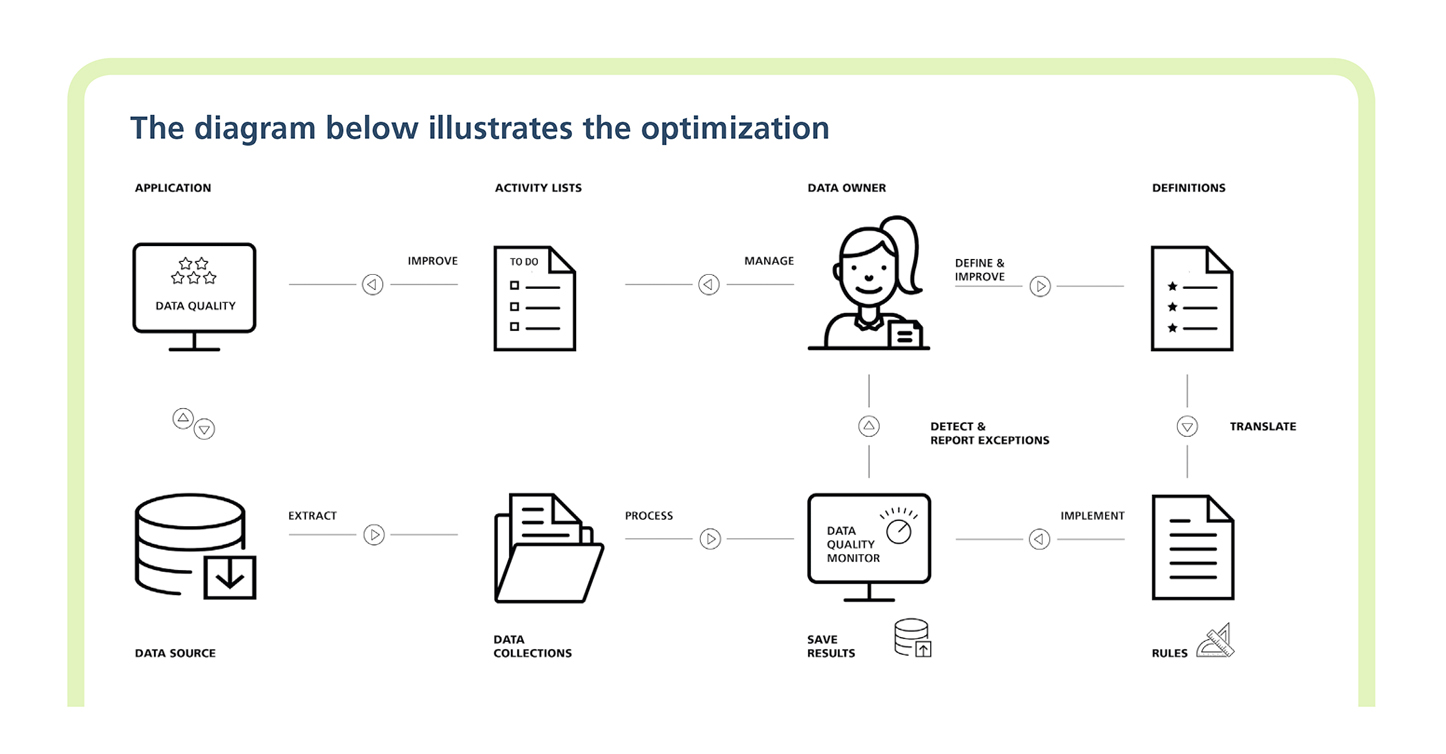

Optimize your data quality with this simple and practical 5-step guide we put together to help you to get a grip on data quality.

Step 1. Define the kind of data to be collected and the elements within that data which should be optimized

Step 2. Define rules for each data element and automate the control mechanisms

Step 3. Assign the responsibility for the optimization of a data entity to a single person

Step 4. Automate data validation against the rule definitions, and keep the results

Step 5. Correct exceptions and adjust the rule definitions if necessary
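
To make the five steps more concrete, here is a minimal Python sketch of how they could fit together. It is an illustration under stated assumptions, not how the Data Quality Monitor works: the `Rule` class, the `validate` function, the owner address, the sample customers and the three rules are all hypothetical, and real rule definitions will depend on your own data.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class Rule:
    element: str                  # Step 1: the data element to optimize
    description: str              # Step 2: the rule in plain words
    check: Callable[[str], bool]  # Step 2: the automated control


# Step 3: a single responsible person per data entity (hypothetical address).
OWNERS = {"customer": "master.data.manager@example.com"}

# Steps 1 and 2: illustrative rules only; real definitions are business-specific.
CUSTOMER_RULES = [
    Rule("name", "must not be empty", lambda v: v.strip() != ""),
    Rule("postal_code", "four digits followed by two capital letters",
         lambda v: re.fullmatch(r"[1-9]\d{3} ?[A-Z]{2}", v) is not None),
    Rule("email", "one @ with text on both sides",
         lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+", v) is not None),
]

history = []  # Step 4: the results of every run are kept here


def validate(entity, records, rules):
    """Step 4: check every record against every rule and log the exceptions."""
    run_at = datetime.now(timezone.utc).isoformat()
    exceptions = []
    for record in records:
        for rule in rules:
            # Treat a missing or None value as an empty string.
            value = str(record.get(rule.element) or "")
            if not rule.check(value):
                exceptions.append({
                    "record_id": record["id"],
                    "element": rule.element,
                    "rule": rule.description,
                    "owner": OWNERS[entity],
                })
    history.append({"entity": entity, "run_at": run_at,
                    "checked": len(records), "exceptions": exceptions})
    return exceptions


# Hypothetical sample data: the second customer breaks two rules.
customers = [
    {"id": "C001", "name": "Acme BV", "postal_code": "1234 AB",
     "email": "info@acme.example"},
    {"id": "C002", "name": "", "postal_code": "12345",
     "email": "sales@shop.example"},
]

first_run = validate("customer", customers, CUSTOMER_RULES)

# Step 5: correct the exceptions at the source, then validate again.
customers[1].update(name="Shop BV", postal_code="5678 CD")
second_run = validate("customer", customers, CUSTOMER_RULES)
```

In this sketch the first run reports two exceptions for customer C002 (an empty name and a malformed postal code), each tagged with the responsible owner, and the second run reports none after the record has been corrected. Because every run is appended to `history`, the trend in data quality can be followed over time rather than measured once.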

Data Quality Scan

Do you want to get started with data quality? We offer a data quality scan to help you on your way.

In this scan we follow our 5-step plan and work according to a proven approach. In 5 days we offer you data management advice and we show you the results of the data analysis in the Data Quality Monitor.

Demo Data Quality Dashboard

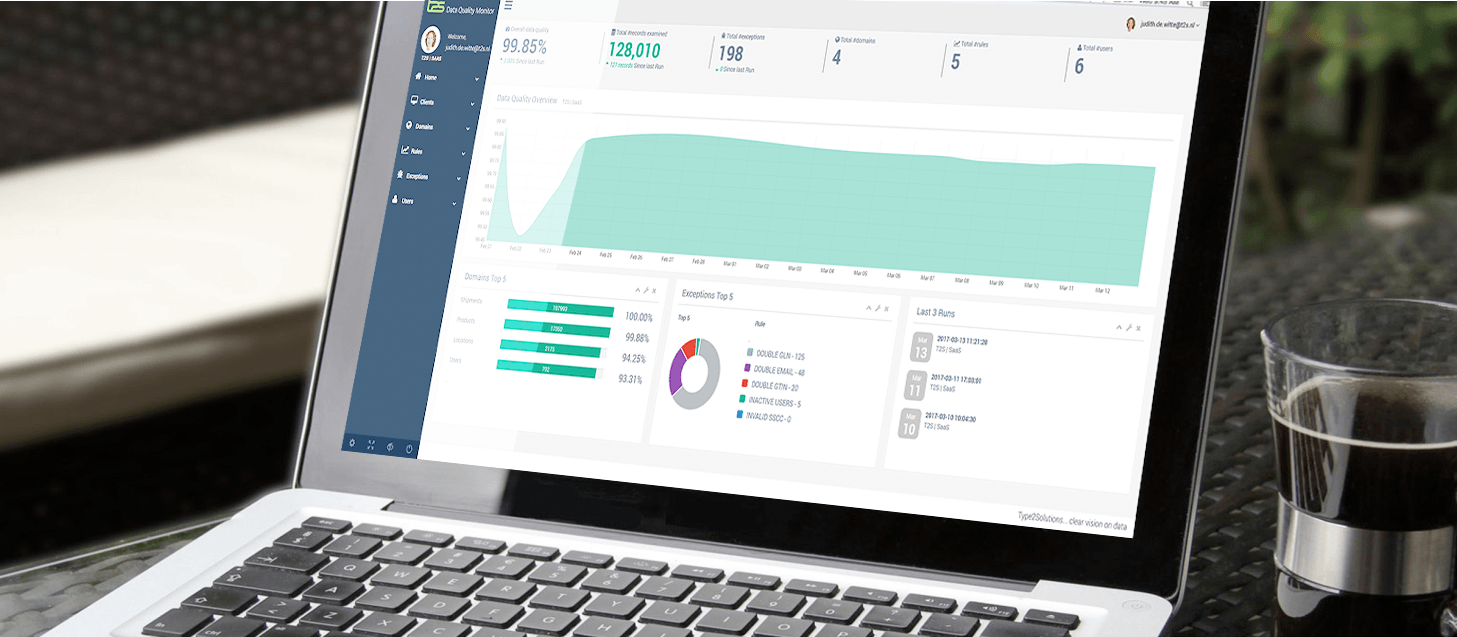

With data quality monitoring software, the quality of huge amounts of data can be continuously monitored and the master data manager immediately receives a signal if there are exceptions.

By deploying a data quality dashboard, you opt for a long-term solution that provides you with a strategic advantage.

Want to know how that works? Experience the Data Quality Monitor and try the demo.

Eichholtz and Data Quality

Eichholtz uses the Data Quality Monitor on a daily basis, to monitor, manage, and optimize the quality of customer and vendor data.

“We had a lot of data pollution in our ERP system. After a first data quality scan, performed by Type 2 Solutions, we knew how to approach the data quality issue and we started with customer data,” shares Jeroen Raijmakers, IT Director at Eichholtz.

Interview with Jeroen Raijmakers, IT Director at Eichholtz.
